Data Ethics Assignment On Word Embedding

Question

Task:

Task 01

Conduct independent research and compile a review report on the use of word embeddings in

business and its possible ethical issues. Your report should include the following requirements in

order:

a) Describe two possible applications of word embedding in business. (4 marks) Hint: For each application, mention what are the motivations/benefits, how it works, what datasets are involved and its results (if known), etc.

b) Discuss two popular implicit biases that usually occur in word embedding applications and their possible ethical issues.

Hint: Describe each bias, give examples and explain why and how biases occur and may lead to ethical issues.

c) Suggest two most important measures/best practices that you think can be used to alleviate the ethically significant harms of these bias problems. Provide justification of your choices and challenges of implementing these measures.

Hint: Your suggestions should align with the harms that you have discussed in the previous section (question 1b). You may review the lecture slides and select the relevant knowledge points. You may also need to perform research on literature to explain and support your points.

Task 02

There is a case study provided and you are required to analyse and provide answers to the questions

outlined below. You can use lecture material and literature to support your responses.

Fred and Tamara, a married couple in their 30’s, are applying for a business loan to help them realize

their long-held dream of owning and operating their own restaurant. Fred is a highly promising

graduate of a prestigious culinary school, and Tamara is an accomplished accountant. They share a

strong entrepreneurial desire to be ‘their own bosses’ and to bring something new and wonderful to

their local culinary scene; outside consultants have reviewed their business plan and assured them

that they have a very promising and creative restaurant concept and the skills needed to implement

it successfully. The consultants tell them they should have no problem getting a loan to get the

business off the ground. For evaluating loan applications, Fred and Tamara’s local bank loan officer

relies on an off-the-shelf software package that synthesizes a wide range of data profiles purchased

from hundreds of private data brokers. As a result, it has access to information about Fred and

Tamara’s lives that goes well beyond what they were asked to disclose on their loan application.

Some of this information is clearly relevant to the application, such as their on-time bill payment

history. But a lot of the data used by the system’s algorithms is of the sort that no human loan

officer would normally think to look at, or have access to—including inferences from their drugstore

purchases about their likely medical histories, information from online genetic registries about

health risk factors in their extended families, data about the books they read and the movies they

watch, and inferences about their racial background. Much of the information is accurate, but some

of it is not. A few days after they apply, Fred and Tamara get a call from the loan officer saying their

loan was not approved. When they ask why, they are told simply that the loan system rated them as

‘moderate-to-high risk.’ When they ask for more information, the loan officer says he doesn’t have

any, and that the software company that built their loan system will not reveal any specifics about

the proprietary algorithm or the data sources it draws from, or whether that data was even

validated. In fact, they are told, not even the system’s designers know how what data led it to reach

any particular result; all they can say is that statistically speaking, the system is ‘generally’ reliable.

Fred and Tamara ask if they can appeal the decision, but they are told that there is no means of

appeal, since the system will simply process their application again using the same algorithm and

data, and will reach the same result.

Provide answers to the questions below based on what we have learnt in the lecture. You may also need to perform research on literature to explain and support your points.

a) What sort of ethically significant benefits could come from banks using a big-data driven system to evaluate loan applications?

b) What ethically significant harms might Fred and Tamara have suffered as a result of their loan denial? Discuss at least three possible ethically significant harms that you think are most important to their significant life interests.

c) Beyond the impacts on Fred and Tamara’s lives, what broader harms to society could result from the widespread use of this loan evaluation process?

d) Describe three measures/best practices that you think are most important and/or effective to lessen or prevent those harms. Provide justification of your choices and challenges of implementing these measures.

Hint: your suggestion should align with the harms that you have discussed in the previous sections (questions 2-b and 2-c). You may review the lecture slides and select the relevant knowledge points. You may also need to perform research on literature to explain and support your points

Answer

Introduction

The concept of data ethics and data science explored in the data ethics assignment are all about properly utilizing the data for the people and society. Data protection laws and personal human rights play a critical role in data ethics to keep the data safe from unauthorized access and leakage. Through proper utilization of data, the goal is to offer customized and optimum service to human beings. This data ethics assignmentaims to understand the application of words in business, implicit bias and ethical issues related to word embedding, use of data in banks.

Task 1

a) Describing two applications of word embedding in business

Word embedding is a natural language processing technique used to process word presentation where words with similar meaning are used for similar representation. Word embedding is used to represent the human understanding of words to machines by representing the words in n-dimensional space and solve most of the Neuro-Linguistic Programming (NLP) problems (Huang, 2017). Two applications of word embedding are discussed in the following section of data ethics assignment:

- Survey response analyzing Word embedding can be used to develop an actionable matrix from thousands of customer reviews. In every business, one of the ways to collect the feedback of their customers is by organizing surveys where a huge number of people give their feedback. Businesses do not have enough time and tools to analyze these responses thoroughly. Using word embedding can help the companies to interpret those feedbacks easily and incorporate that information in business. However, these customer feedbacks have a huge role in the Return of Investment (ROI) and brand value. Herein data ethics assignment, Word embedding is used by the companies to plot the feedback in a matrix (Gupta, 2019). This matrix represents the complex data-sets and relationship between the words to understand the responses in a specific context through data science and machine learning. This information provided in the data ethics assignmentsignifies that this helps the companies to understand and identify actionable insights for the businesses through the evaluation of those customer feedbacks.

- Music/Video recommendation system

Content businesses like YouTube, Spotify are booming right now, thanks to the recommending system it uses. Currently, the recommending system offers content recommendations which a user particularly like and understand. The aim is to offer that content which the user can enjoy. Personalized radio, podcast, playlists can make the recommendation more efficient by using Word embedding. The algorithm used in the recommendation system interprets the user’s listening queue as a sentence where songs are considered as words. Word embedding can accurately plot each song by a vector of coordinates which maps the context in the n-vector space (Pilehvar et al. 2017). This will improve the recommendation algorithm to the customers and will provide better content choices for them.

b) Discussing two implicit biases that occur in word embedding applications and ethical issues related to it

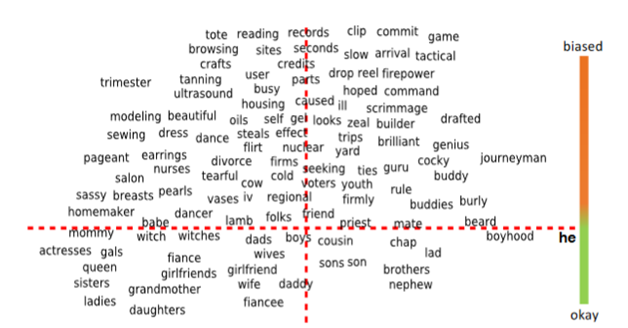

Bias 1: Showing stereotypical biases towards gender

According to the researchers, they have seen that word embedding algorithms show stereotypical biases such as gender bias. When using these algorithms in widespread applications like automated translation services, curriculum vitae scanners, it tends to amplify the stereotypes in an important context. In both word embedding methods (Word2Vec and GloVe) show stereotypical biases like the disproportionate association between the male terms with the science terms and female terms with the terms of the art (Zhao et al. 2019). Now, thispoint mentioned in the present section of data ethics assignment can create serious problems in the fundamental operation of those implemented systems. Employees, applicants can face problems during their job or recruitment process in those companies where these systems are used. Removing articles identified through using Word Embedding Association Test (WEAT) can impact the WEAT matrix in a predictable and quantifiable way (Gonen and Goldberg, 2019). This WEAT Matrix noted in the context of data ethics assignment shows a correlation with human bias. Through removing the small subsets in the training corpus can change the biased result.

Bias 2: Speech transcription shows higher error rates for African Americans than White Americans

Through understanding the language of human beings, speech recognition software translates the spoken language into the written language to perform tasks. However, in this transcription process, a bias can be seen between the African Americans and White Americans. According to the research on data ethics assignment, the rate of making errors while transcription for African Americans is double than White Americans which is unethical for the African American people. The system should work equally for both of these groups; instead, it shows bias in its operation (Dorn, 2019). This translation imbalance creates serious consequences for the careers and lives of these affected people. Companies use speech transcription during automated interview process and courts use this to transcribe hearings which will create biasness among different people.This issue mentioned in the data ethics assignmenthas been found in all the leading language transcription systems developed by Apple, Google, IBM, Amazon and Microsoft. The main reason behind this error is different speech patterns among African Americans (Stanford, 2020). The rate of error is maximum for African American male. The error was seen even higher for those people who use African American Vernacular English. The error rate for misunderstanding the words is 35% for African Americans where the rate is only 19% for White Americans.

c) Suggesting two measures that can be used to alleviate the ethically significant harms of the aforementioned bias problems

Still, now, there are several factors which are unknown to the people regarding this Artificial Intelligence and Word Embedding systems. More study is needed to tackle these biases in the AI systems. Two solutions for two of the aforementioned biases are illustrated in the next section of data ethics assignment:

- Bias 1: Using debiasing algorithms can be a great option to reduce gender bias in word embedding problems. These debiasing algorithms are defined as sets of words in the place of word pairs. The first step of debiasing is Identifying gender subspace which identifies a direction of the embedding process which locates the bias. The next step is Neutralizing and Equalizing or Softening. Neutralizing makes sure that the number of gender-neutral words in the gender subspace is zero (Bolukbasi et al. 2016). Equalize makes sure that the set of words which are outside the subspace are equalized and makes the neutral words equidistant to all the words in this equality set. The main challenge noted within the data ethics assignment in this process is there are several factors is still unknown to the developers and sometimes these debiasing process does not show adequate results.

- Bias 2: The speech recognition systems usually include the language model trained from text data and acoustic model trend from audio data. The main reason behind racial disparities is due to the performance gap in aquatics models. This point covered in the present segment of data ethics assignment signifies that the words and its accents also have to be diversified from the current condition in these systems so that it can properly work for every type of accent in English language (Koenecke et al. 2020). Through the inclusion of regional and non-native English accents in these systems, the overall effect can be improved to a great extent. The speech recognition system developers should regularly assess the progress and publicly report the progress with dimension. Inclusion of phonological, prosodic and phonetic characteristics rather than grammatical or lexical characteristics in these speech recognition systems can drastically reduce the error. The developers have to provide huge time to incorporate these accents to the system which will take huge time and resources.

Figure 1: Debiasing process

(Source: Bolukbasi et al. 2016)

Task 2

a. Ethically significant benefits from banks using a big-data-driven system to evaluate loan applications

Banks often miss out on offering customers due to lack of emotional attachment. However, the application of big data analytics can be useful for analyzing customer behavior by identifying their investment pattern, financial background and personal backgrounds. Business intelligence (BI) tools are widely used for leveraging the big-data system and identifying the potential risk in loan sanctioning (Datafloq, 2019). Market trends can be analyzed based upon the regional data and interest rates can increase or decrease as per the segment. As noted herein data ethics assignment, easy detection of frauds and errors can be identified by seeking help from big-data in real-time. 68% of bank employees are having concern about meeting with regulatory compliance in the banking sector. Big-data systems are also analyzing regulatory requirements through the use of BI tools, where the application of Fred and Tamara will be checked. Then the loan applications can be validated after fulfilling the regulatory compliance criteria with the analytical dashboard.

b. Ethically significant harms for loan denial to Fred and Tamara

Fred and Tamara are in the process of loan denial along with under the security risk due to the exploitation of their data disclosure to the software. Now, the software has all the accessibility to their bill-payments, drugstore purchase, medical histories, health risk factors, watched movies and others. The denial of the loan after accessing Fred and Tamara's personal information is not only a breach of law or regulatory framework but also a threat towards their livelihoods. TheLack of fairness leads to risks of damage related to reputational, psychological and even economic devastation along with loss of physical freedom of them. As per the investigation carried on the data ethics assignment, the exact reason behind the loan denial is unknown and leads to a mental breakdown among them and even causes violating the government norms.

- Harms to security and privacy

All of the data shared byFred and Tamara only leads to their life at risk due to the black web and also the involvement of third parties, which can even ruin their life permanently. The proprietary algorithm of the software not only breached their regulated performance but also neglected the rule of the bank, which is undeniable. The personal data exploitation in the Virtual platform or dark web leads to impact upon the economic, reputational and even emotional harms, which cannot be repaid by the bank by any manner. - Harms to justice and fairness

All the customers including Fred and Tamara have the right to be treated fairly, whether it is even the question of accessibility to their personal and confidential information. Continues inaccuracies, often hidden biases, avoidable errors, arbitrarinessin datasets leads to the common cause of harm to justice and fairness (Vallor, 2018). Most of the banks are intended to maintain their regulatory compliance for maintaining a fair policy. However, the bank selected in this part of data ethics assignment has been associated with big-data and involved in ethical failures due to lack of adequate design, implementation and audit of data practices, which lead to unfair and injustice towards them. - Harms to autonomy and transparency

The loan application gets denied from the bank authority, as per the norms the bank is bound to solve their questions about the denial of the loan application. Instead of that, the bank follows in-transparency by making them restrict from taking legal action. However, the back authority has suspected the software package, which they are used for granting and assessing the loan application by pointing to its data practices and algorithms. The big-data is mainly based upon the machine learning algorithm and useful for dealing with large datasets. However, some problems occur during dealing with “deep learning algorithms” as the reconstruction of the machine’s reasoning is next to impossible (Mungai and Bayat, 2018). So, the exact reason for the loan application denial with ‘moderate-to-high risk’ is still not clear by Fred and Tamara, which is a real-time outcome that harms transparency and autonomy by the bank.

c. Broader harms to society due to widespread use of this loan evaluation process

The loan evaluation process not only harms Fred and Tamara but also makes a great harm to the existing society. Lack of transparency is the main concern, which makes the software vulnerable towards utilizing the data practices and datasets ethically. In the context of the case study considered to develop this data ethics assignment, the data practices and datasets used by the software package is not abided by the ethical norms due to the present value of machine learning language with algorithms. As a result, the bank authority does not even know the data sources for sanctioning the loan to Fred and Tamara. Additionally, the bank is lagging following the ethical norms and regulatory framework, which leads to negative intervention in the institutional sectors and social systems, further leads to lack of economic security, lack of educational outcomes, unfair practices in institution works and lack of health policies (Felt, 2016).

d. What are the three measures/best practices for preventing harms discussed within this data ethics assignment? Justify.

- Promoting transparency, trustworthiness and autonomy value

The bank authority and the software company can enhance the customer relationship aspects by focusing on the factors of transparency, trustworthiness and autonomy value. Reducing the ‘opt-out’ options and favoring the ‘opt-in’ options with clear choice avenues for data participants can enhance the value of transparency and autonomy, which further leads to trust in the loan officer as well as other banking sectors. Using privacy policies for maintaining company interests, privacy concerns related to big-data and transparency towards intellectual values abided by all the regulatory frameworks.Explaining any loan application denial or any banking complaints needs to be abided by the Government Transparency and Accountability Policies (2015). However, using Business intelligence (BI) tools are useful for making regulatory compliance with the software package to offer better customer-focused value and playing a sustained public trust including Fred and Tamara. - Designing security and privacy

Poor data security in the software used in the bank leads to virtual exposure of confidential data, which should never be shared with anyone. In the context of the case study analyzed herein data ethics assignment, Fred and Tamara need to have secured service from the bank, where their personal and confidential data are not exploited in any manner. Designing the privacy not only involved in the change in the existing technical design including algorithms, datasets and data practices change but also in organizational design, which involves resource allocations, incentives, policies and techniques (Hassaniet al. 2018). Changing the existing preset machine learning algorithms for upgrading the software to read “deep learning algorithm” for algorithms” reconstructing the machine’s reasoning is necessary. Regular auditing of the machines, as well as the accessed data of customers including Fred and Tamara, is recommended in the data ethics assignment. - Establishing accountability and ethical responsibility chains

Following ethical data,the practice requires to develop ethical responsibility chains along with higher accountability levels. Development of contingency plan by seeking advice from big-data experts, who can provide them with a diverse range of analytical data implementation ideologies. An ethical risk management plan also needs to be developed by which the bank authority and the software company also bounded to treat the customers with an ethical manner. The bank also needs to change its company policy for loan sanctioning to ensure that the customer relationship can get better by abiding by the regulatory frameworks such as the Data Protection Act (2018). They also need to set some improvised policies and frameworks for the software company to maintain their fairness and justice.

Challenges

All of the employees in the bank may not interest to make changes in the existing service and abided by the ethical consideration. The improvised policies may get failed if it cannot fulfill the demands of customers. Following the government policy blindly can leads to harm the behavioral approach as they are not intended enhance their performance? Lack of audit in data practices,lack of adequate design is another determinant stated in the data ethics assignment, which can act as a challenge.

Conclusion

From the above study on data ethics assignment, it has been concluded that word embedding has great potential to be successful in future, but it needs more improvement right now. As a result, Fred and Tamara can get a personalized loan and investment solutions based upon their financial status by big-data analytics. The brand recognition and reputations of the bank also get hampered due to biasing and lack of justice in its data accessibility. On the other hand, ethically using data can generate a wide range of benefits in context to society from improved health to better educational outcomes, economic security expansion and fairer banking services.

Reference list

Bolukbasi, T., Chang, K.W., Zou, J.Y., Saligrama, V. and Kalai, A.T., 2016. Man is to computer programmer as woman is to homemaker? debiasing word embeddings. Data ethics assignmentIn Advances in neural information processing systems (pp. 4349-4357).

Data Protection Act. 2018. Data Protection Act 2018. Available at: http://www.legislation.gov.uk/ukpga/2018/12/contents/enacted [Accessed on: 7.6.2020]

Datafloq. 2019. Big Data in Banking Services: Advantages and Challenges. Available at: https://datafloq.com/read/big-data-banking-services-advantages-challenges/3178#:~:text=Efficient%20risk%20management%20that%20helps,

sanctioning%20loans%20to%20potential%20customers.&text=Bank%20frauds%20often%20go

%20unnoticed,functioning%20of%20the%20banking%20services. [Accessed on: 7.6.2020]

Dorn, R., 2019. Dialect-Specific Models for Automatic Speech Recognition of African American Vernacular English.In Student Research Workshop (pp. 16-20).

Felt, M., 2016. Social media and the social sciences: How researchers employ Big Data analytics. Big Data & Society, 3(1), p.2053951716645828.

Gonen, H. and Goldberg, Y., 2019. Lipstick on a pig: Debiasing methods cover up systematic gender biases in word embeddings but do not remove them. arXiv preprint arXiv:1903.03862.

Government Transparency and Accountability Policies. 2015. 2010 to 2015 government policy: government transparency and accountability. Available at: https://www.gov.uk/government/publications/2010-to-2015-government-policy-government-transparency-and-accountability/2010-to-2015-government-policy-government-transparency-and-accountability#:~:text=The%20UK%20is%20a%20member,Board%20was%20established%20in%

202010.&text=These%20principles%20show%20departments%20how,data%20based%20on%20public%20demand. [Accessed on: 7.6.2020]

Gupta, S. 2019. Applications of Word Embeddings in NLP. Available at: https://dzone.com/articles/applications-of-word-embeddings-in-nlp [Accessed on: 7.6.2020]

Hassani, H., Huang, X. and Silva, E., 2018.Data ethics assignmentDigitalisation and big data mining in banking.Big Data and Cognitive Computing, 2(3), p.18.

Huang, R. 2017. An Introduction to Word Embeddings. Available at: https://www.springboard.com/blog/introduction-word-embeddings/ [Accessed on: 7.6.2020]

Koenecke, A., Nam, A., Lake, E., Nudell, J., Quartey, M., Mengesha, Z., Toups, C., Rickford, J.R., Jurafsky, D. and Goel, S., 2020. Racial disparities in automated speech recognition.Proceedings of the National Academy of Sciences, 117(14), pp.7684-7689.

Mungai, K. and Bayat, A., 2018, November.The impact of big data on the South African banking industry.In 15th International Conference on Intellectual Capital, Knowledge Management and Organisational Learning, ICICKM 2018 (pp. 225-236).

Pilehvar, M.T., Camacho-Collados, J., Navigli, R. and Collier, N., 2017.Towards a seamless integration of word senses into downstream nlp applications.arXiv preprint arXiv:1710.06632.

Stanford. 2020. Stanford researchers find that automated speech recognition is more likely to misinterpret black speakers. Available at: https://news.stanford.edu/2020/03/23/automated-speech-recognition-less-accurate-blacks/ [Accessed on: 7.6.2020]

Vallor, S., 2018.An Introduction to Data Ethics.Course module.) Santa Clara, CA: Markkula Center for Applied Ethics.

Zhao, J., Zhou, Y., Li, Z., Wang, W. and Chang, K.W., 2018.Learning gender-neutral word embeddings.Data ethics assignmentarXiv preprint arXiv:1809.01496.